I run a combination of Grafana, Graphite, and Collectd to monitor our entire system on AWS. I ran into a situation where Graphite put significant I/O pressure on the EBS volume, which forced me to increase its IOPS and throughput, and that meant paying more for EBS than we should have to. In this post, I’ll share my experience of migrating Graphite from the Python-based implementation to a Golang-based one, which resolved the issue and reduced our AWS cost as well.

Graphite Overview

Graphite is used as a data source in Grafana, not the other way around. In short, Graphite is an open source monitoring solution written in Python and a time series database (TSDB) for metrics. It basically does two things:

- Store numeric time-series data

- Render graphs of this data on demand. To me, the graphs created in Graphite are ugly and have so many limitations that it is hard to monitor the metrics I want. That’s why we need Grafana here.

Graphite consists of three components:

- Carbon: the data ingestion layer, a daemon built on Twisted that receives metrics and writes them to disk (via Whisper).

- Whisper: a simple database library for storing time-series data (received from Carbon) on disk.

- Graphite Webapp: a Django webapp that renders stored metrics into graphs and images.
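To get a feel for what Whisper puts on disk, here is a rough sketch that estimates the size of a single Whisper file from its retention settings. The byte sizes reflect my understanding of Whisper's fixed-size file layout (a small header, one entry per archive, and 12 bytes per data point), and the retention values are made-up examples, not my production settings.

```python
import struct

# Sizes as I understand Whisper's on-disk layout (big-endian, no padding).
METADATA_SIZE = struct.calcsize("!2LfL")    # aggregation, max retention, xFilesFactor, archive count
ARCHIVE_INFO_SIZE = struct.calcsize("!3L")  # offset, seconds per point, number of points
POINT_SIZE = struct.calcsize("!Ld")         # timestamp + value


def whisper_file_size(archives):
    """Estimate the file size in bytes.

    archives: list of (seconds_per_point, retention_seconds) pairs,
    one pair per retention level.
    """
    points = sum(retention // step for step, retention in archives)
    return METADATA_SIZE + ARCHIVE_INFO_SIZE * len(archives) + POINT_SIZE * points


# Hypothetical retention: 10-second points for 6 hours, 1-minute points for 7 days.
example_archives = [(10, 6 * 3600), (60, 7 * 86400)]
print(whisper_file_size(example_archives), "bytes per metric")
```

Each metric gets its own file like this, so a server with many metrics ends up doing many small writes spread across many files, which is the kind of workload that eats IOPS.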

Graphite itself doesn’t collect data for us. Instead, we have to use other tools to collect metrics and send them to Carbon. I’m using Collectd in this case.
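To illustrate what "sending metrics to Carbon" means, here is a minimal sketch of Carbon's plaintext protocol, where each data point is one line of the form `metric.path value timestamp`. Collectd does this for me in practice; the function names, the metric path, and the host below are hypothetical, and I'm assuming Carbon's plaintext listener is on its usual port 2003.

```python
import socket
import time


def format_metric(path, value, timestamp=None):
    """Build one plaintext-protocol line: '<path> <value> <unix timestamp>\\n'."""
    if timestamp is None:
        timestamp = int(time.time())
    return f"{path} {value} {int(timestamp)}\n"


def send_metric(line, host="127.0.0.1", port=2003, timeout=5.0):
    """Send one formatted line to a Carbon plaintext listener over TCP.

    The host and port are assumptions; adjust them to your own setup.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(line.encode("ascii"))
```

With a Carbon (or go-carbon) listener running, something like `send_metric(format_metric("servers.web01.cpu.load", 0.42))` would record one data point for that metric.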

Issue with Graphite

After upgrading Grafana to the latest version, which was 9.x at the time (as of this writing, it is 12.x), I noticed a significant degradation in the performance of the Grafana server. For a reason I didn’t know at first, the EBS volume that Grafana, Graphite, and Collectd were running on was under sustained I/O pressure that exceeded the default 3,000 IOPS of a gp3 volume. As a temporary fix, I increased the provisioned IOPS to 6,000, which drove up the cost, but the load didn’t ease. CPU and memory usage were also running high at the same time, so I had to upgrade the instance to a larger type (m6g.large) as well.
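To put the cost of extra IOPS in perspective, here is a small back-of-the-envelope sketch. It assumes gp3 includes 3,000 IOPS in the volume price and bills only the provisioned IOPS above that. The price per IOPS-month is an assumption for illustration only, so check the current EBS pricing for your region before relying on the number.

```python
GP3_BASELINE_IOPS = 3000              # IOPS included with a gp3 volume
ASSUMED_PRICE_PER_IOPS_MONTH = 0.005  # USD; an assumed price, not taken from my bill


def extra_iops_cost(provisioned_iops, price=ASSUMED_PRICE_PER_IOPS_MONTH):
    """Monthly cost in USD of the IOPS provisioned above the gp3 baseline."""
    billable = max(0, provisioned_iops - GP3_BASELINE_IOPS)
    return billable * price


for iops in (3000, 6000):
    print(f"{iops} IOPS -> about ${extra_iops_cost(iops):.2f} extra per month")
```

The amount per volume is small, but it repeats every month and on every volume that is over-provisioned the same way.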

After investigating, I found the problem had two parts. The first was that the RRD plugin in Collectd was enabled. I didn’t need this plugin, so I turned it off, which brought the load down dramatically to nearly 3,000 IOPS. That was still high, and further digging showed that the second part was Graphite’s Carbon daemon. Upgrading to the latest Graphite version at that time didn’t help at all.

Solution

I did some research and came across the go-carbon project, a Golang implementation of the Graphite/Carbon server with the classic architecture: Agent -> Cache -> Persister. Its introduction claims that it is faster than the original Carbon. One article (see References) also claims that carbonapi (a replacement for the Graphite webapp) is 5x-10x faster than the Graphite webapp. This got me thinking that it might address the high I/O, CPU, and memory load I was seeing. So I decided to give it a go and set up a test to examine the results.

Graphite Carbon architecture design diagram

Source: go-graphite/go-carbon (MIT License)

https://github.com/go-graphite/go-carbon

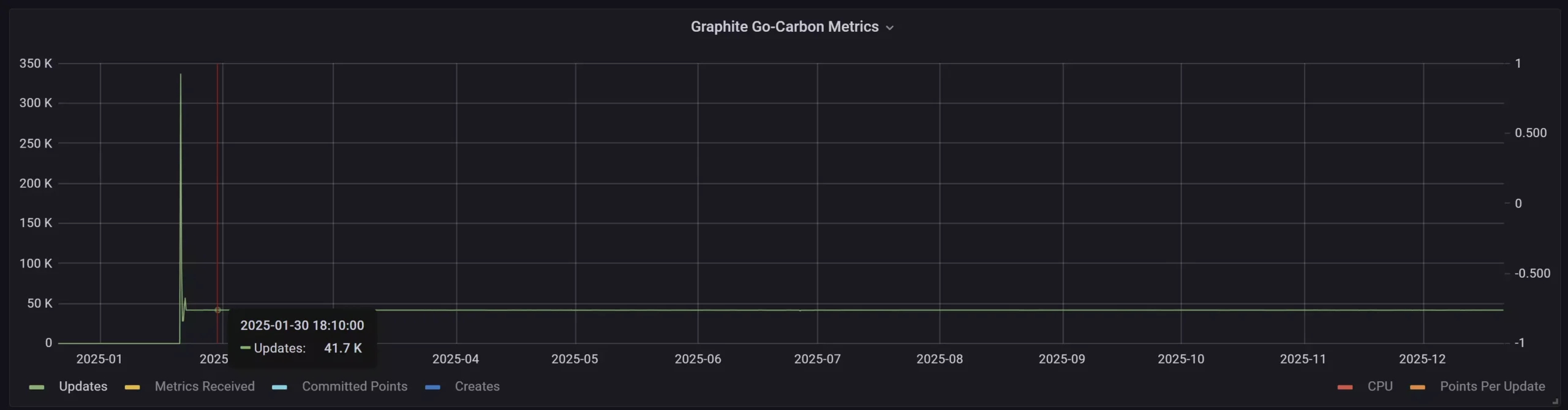

As it turned out, go-carbon really is much faster than the original Carbon, as far as I could tell. I ran the comparison on a test Grafana server with the same instance type (m6g.large) and an EBS volume with 6,000 IOPS. For update operations, the Python-based Carbon handled roughly 10k metrics/s, while the Golang-based Carbon handled around 40k metrics/s with the same setting, max-updates-per-second = 500. With max-updates-per-second set to 0, which is the default, the Golang-based Carbon jumped to 450k metrics/s, which is significantly faster. However, that default setting pushed write operations on the EBS volume to 3,000 ops/s, while read operations stayed just below 1,000 ops/s. I therefore set max-updates-per-second to 700, which brought usage down to approximately 2,000 IOPS and seems to be a reasonable value for us.
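To show why a setting like max-updates-per-second trades disk operations against throughput, here is a toy model of a cache with a rate-limited persister. This is only my simplified mental model and not go-carbon's actual implementation; the class and method names are hypothetical. The idea is that points pile up in the cache per metric, and each disk update flushes everything pending for one metric, so fewer updates per second means bigger batches per write.

```python
from collections import defaultdict, deque


class ThrottledPersister:
    """Toy model of a cache plus a rate-limited persister (not go-carbon's real code)."""

    def __init__(self, max_updates_per_second):
        self.max_updates_per_second = max_updates_per_second  # 0 means no limit
        self.cache = defaultdict(list)  # metric name -> pending (timestamp, value) points
        self.queue = deque()            # metrics waiting for a disk write, in arrival order

    def receive(self, metric, timestamp, value):
        """Buffer one incoming point; queue the metric if it had nothing pending."""
        if not self.cache[metric]:
            self.queue.append(metric)
        self.cache[metric].append((timestamp, value))

    def tick(self):
        """Simulate one second of persisting; return (disk_writes, points_written)."""
        budget = self.max_updates_per_second or len(self.queue)
        writes = points = 0
        while self.queue and writes < budget:
            metric = self.queue.popleft()
            batch = self.cache.pop(metric)  # one write flushes the whole batch
            writes += 1
            points += len(batch)
        return writes, points


persister = ThrottledPersister(max_updates_per_second=2)
for ts in range(3):
    for name in ("cpu", "mem", "disk", "net"):
        persister.receive(name, ts, 1.0)
print(persister.tick())  # at most 2 disk writes happen in this simulated second
```

In this model, a limit keeps the number of writes per second bounded while the remaining metrics wait in memory, which matches what I saw: a lower limit reduced IOPS at the cost of holding more points in the cache.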

Just note that the graph above doesn’t include data from the Python-based Carbon because I cleared the old data before writing this post.

Migrating Graphite from the Python-based implementation to the Golang-based one let me reduce the IOPS allocated to the EBS volume and also gave me the chance to downgrade the instance to a smaller type. This results in long-term cost savings, and performance did not suffer; it actually improved without any extra infrastructure spend. If you run Graphite on a large instance type with a large, high-IOPS EBS volume, you might benefit from this migration in both cost and performance.

Conclusion

Running Grafana with the Go-based Graphite backend makes my monitoring site feel much more responsive and eliminates the bottlenecks I had before. More importantly, it resolves the high load I was seeing on disk I/O, CPU, and memory. The monitoring stack now runs more efficiently without overspending on infrastructure, which aligns with our cost-optimisation strategy.

I know this might sound like a small saving, but when you run a system in the cloud (AWS in my case), cost management is an ongoing challenge, and unnecessary cost tends to come from countless factors that we want to avoid as much as possible. With cost optimisation in mind, I think a good approach is to make small, incremental improvements in different areas, e.g. EBS volumes, instance types, and the applications themselves. Over time, this gradually cuts unnecessary cost across the system without impacting its performance. Ultimately, I believe this mindset makes you more valuable, both for your own growth and for the place you work.

References

- https://github.com/go-graphite/go-carbon

- https://github.com/go-graphite/carbonapi

- https://gavd.co.uk/2022/11/carbonapi/index.html

- https://graphite.readthedocs.io/en/stable/index.html
